The editor of eCCO Magazine would like to draw readers’ attention to the article published by Dominik Mate Kovacs, Co-Founder of Defudger.

What is the Media Doing Against Disinformation Today?

The current stance towards tackling fake news within the Media Industry from the perspective of a startup founder.

Disinformation is misleading information created, presented, and disseminated for economic gain or to intentionally deceive the public. It may have far-reaching consequences, cause public harm, and threaten democratic political and policy-making processes.

In this article series, I am going to talk about the problems our team has faced on the journey towards detecting manipulated media content, from late 2018 until today.

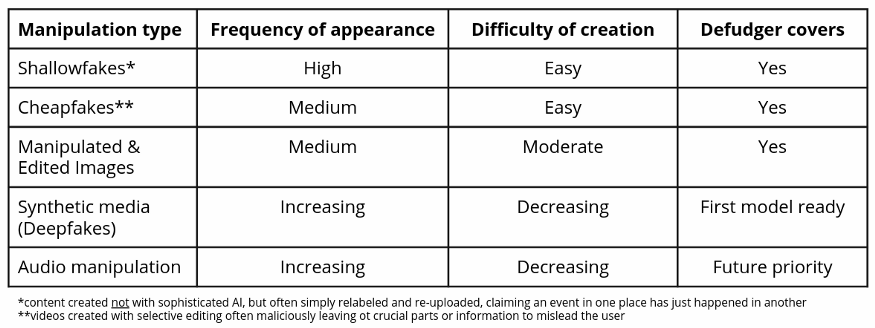

Defudger offers a one-stop solution for visual content fact-checking: we simplify the process and shorten the time spent on collecting background information and analyzing the content for inconsistencies. As a result, humans have more time to make a qualified judgment and to fact-check more content, since the main problem right now is that not enough content is checked.

Like any startup, we began with several assumptions, and during our experiments I had to face the fact that most of our assumptions about the media industry and the threat of fake news were wrong. However, it is important to say that this reflects the situation today; much of it can change within months or years.

Below, I am going to explain the current state of fake news detection from the perspective of the market. I go through the assumptions we had and why they were wrong. In a follow-up article, I will talk about product and technological limitations.

Deepfakes are not such a threat as of now

Deepfake is a form of synthetic media content generated by AI that presents something that did not happen or someone saying something that they never actually said. The wider availability of computing power and the development of AI technology accelerated the commodification of Deepfake technology.

When we started Defudger in 2018, we were sure that the Deepfake threat was coming and that we had to get ready with a working solution as soon as possible to be the first mover in the market. Although we did our best to build outstanding Deepfake detection software, we had to face the fact that media companies were initially more interested in forged images. The reason seemed to be the small number of incidents in which Deepfakes had caused a major social issue. Although there have been several cases, from fraud to political manipulation, the situation still feels unchanged. Deepfakes truly have the potential to cause harm, so maybe we only have to wait for the first major disruption.

Despite the limited number of controversial videos, technological development has not stopped. The first Deepfake Detection Challenge ended in May 2020, and there are already rumors about a second round. During the fall, several new Deepfake tools came out, developed by researchers and the community, achieving better and more robust results than ever before. Overall, the field is clearly still advancing.

Media content comes from a known source

Besides Deepfakes, I have to mention doctored photos, also known as photoshopped images. There have already been several cases of image manipulation (2004 US Presidential Campaign, Spiegel Scandal, National Geographic), so I felt this must be the field we as Defudger had to focus on. We hypothesized that content is not validated when it arrives in the media. I urged the team to implement several image detection algorithms in our solution so that we could cover a larger segment of fake content.

Over several months, we tested our web application with news and media firms until we got some of the results we were looking for:

Most media companies consider visual content already validated because it comes from press agencies (DPA, Reuters) and sometimes from their own photographers.

But how about deploying a tool for the press agencies that create the visual content? That is a great question. However, since most of them have their own photographers, there is rarely demand for fact-checking their content. Although scandals had already happened, I saw no pressure to use a service like ours. I concluded that more focus had to be placed on social media content.

The important details of social media

Social media content can come from several sources; in my experience, mostly from Twitter, Facebook, and Instagram.

I interviewed the social media heads of German media firms (Der Spiegel, ARD, DPA), and they all emphasized that they want to keep a close eye on the social landscape because it is particularly useful for trending topics.

When we had social media in the scope of the product, I did not pay attention to the importance of understanding the story behind the content. Our pilot testers told us that they focused on understanding the people behind each post. They would investigate and message them, and in doing so build up trust. Our tool had no feature for creating a network of trusted and verified social media profiles.

Looking solely at whether the content is manipulated or not, I would distinguish two categories for the posts themselves:

- Topics of no social importance

- Topics involving political and major societal issues

For the first category, there is no demand to know the truth all the time. This is understandable, since in most cases it carries no risk, e.g. when the image is used for entertainment.

It is crucial to focus our tool on the second category. If media firms publish images or videos that later turn out to be modified, they could face serious trouble. Or could they?

Lack of education

It is hard for journalists without a technical background to understand the nature of fake news and the spread of misinformation. Using the current tools for digital forensics requires a pretty serious level of technical understanding. When I realized this, I advised our team to make our product as user-friendly as possible.

At the moment, no system can make decisions without human intervention. Until such systems are widely available, the parties involved should educate their staff and their audience to spot misleading content. Humans are still relatively good at telling fake content apart from authentic content when it comes to less perfect forgeries. The general public needs to be educated about the existence of deepfakes, image manipulations, and the opportunities AI technology could bring. Not all deepfakes are malicious. However, because technology makes it so easy to create realistic forgeries, malicious users can easily exploit it to perform attacks.

The demand for legislation

In the Western world, democracy has a feature called “Freedom of Speech”. Indeed, being able to say whatever we want is a crucial part of our everyday life. However, when it comes to charges against media companies, it is not a simple matter to just mute what they want to publish. The European Parliament is working on solving this issue. Germany is doing the same, but from my perspective, these efforts have produced no results yet. I had expected these laws to be strong already and to have a huge effect. However, I had to face the fact that if Defudger wanted to seriously pursue the media industry, we would have to wait for more legislation.

In my opinion, the value proposition of fake news detection services could increase significantly if potential charges were higher and stricter. For this to happen, several possible solutions exist, ranging from a central authority, similar to what Facebook is doing (but of course fully independent of any company), to a blockchain-based consensus in an ideal world.

Now that I have gone through the business issues of our story, I will continue in a future blog post with the technological bottlenecks of fake news detection for the media industry.

Thank you for reading my article. If you want to have a discussion or catch up with my recent work, follow me here on Medium or let’s connect on LinkedIn.

If you have any questions or subjects you would like to discuss further, please leave a response. I will be happy to talk about it!

Dominik Mate Kovacs leads product development and technological research at Defudger and Colossyan. At Defudger, research is pursued with world-class institutions in fake news detection. Outcomes are built into our products for the benefit of society. At Colossyan, our aim is to challenge the status quo, offering highly scalable video generation for businesses and organizations in a fully ethical manner.